Generalized Grasping with Sawyer

Introduction

Grasping objects is one of the central challenges of robotics, with applications in nearly every use of robots, from industry to medicine. It is an active area of research and is considered an exceptionally difficult task in the field of Reinforcement Learning.

The applications and reputation of grasping inspired us to form our own approach to the problem. The goal of our project was to design our own algorithm to detect, localize, and grasp general objects in a controlled environment. Through our design choices, we sought to maximize the set of objects we could grasp. To do this, we tackled the problems of detecting general objects independent of shape and size, determining grasping angles, and controlling the end effector to grab the object without collisions. This led to an original implementation that we designed and built independently of similar research.

This project applies to any setting that requires grasping objects that cannot be determined beforehand. For example, it can be used for robot arms in industrial plants that need to move several different objects, in emergency situations where rubble can take on any shape, or in medicine where there are hundreds of different tools.

Design

Criteria

When we set out, our goal was to grasp objects with as few restrictions as possible. This meant that maximizing the size of the set of graspable objects was a top priority in evaluating a particular design choice.

We wanted to balance the flexibility of our approach with its difficulty. This motivated our choice to use a Kinect sensor for primary data input vs something more flexible, like a camera. Using a Kinect allows for detection and localization of general objects (described in detail in the Image Pipeline).

From the beginning, we knew we wanted to mount the Kinect looking down over the table from above. This motivated our entire hardware design, and our rationale for that choice is listed below.

Lastly, we wanted our attachment to be easy to attach and detach, as we would have to do this each time we went to the lab and wanted to waste as little time as possible.

Details

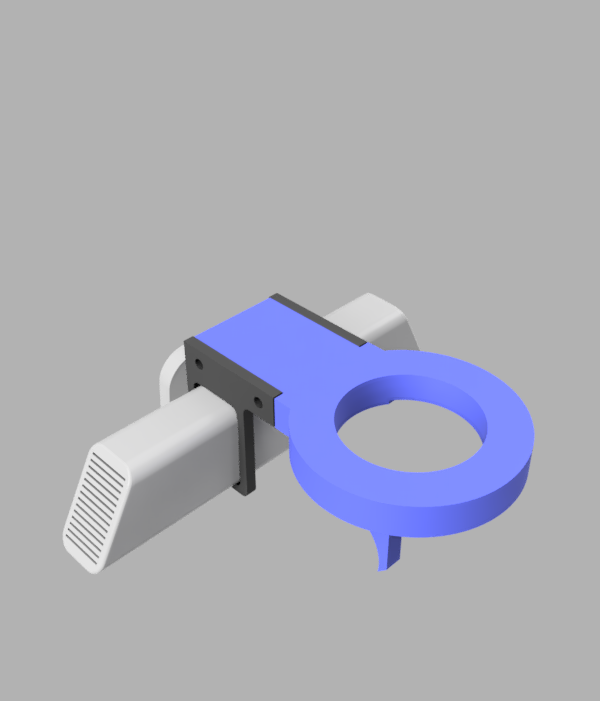

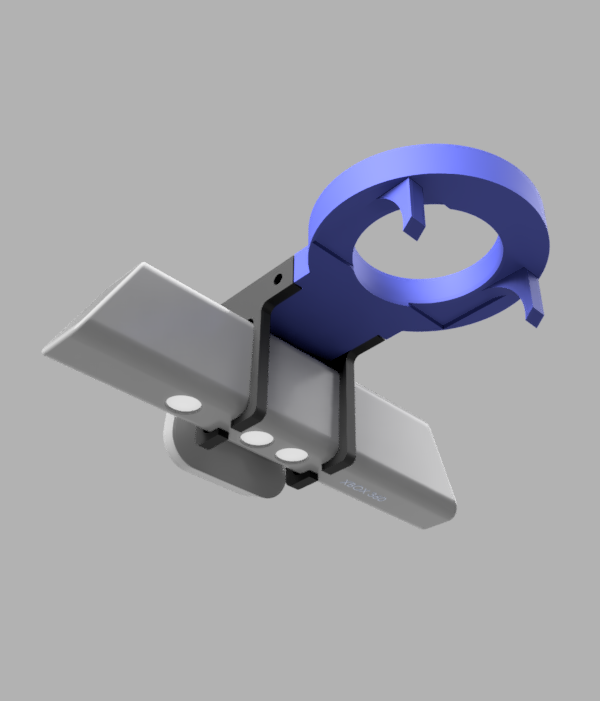

Our overall design featured a 3D-printed cuff which we attached to Sawyer’s wrist. On this, we mounted a Kinect sensor, pointing downwards. We then installed an electric parallel gripper with the shorter gripper components onto the Sawyer.

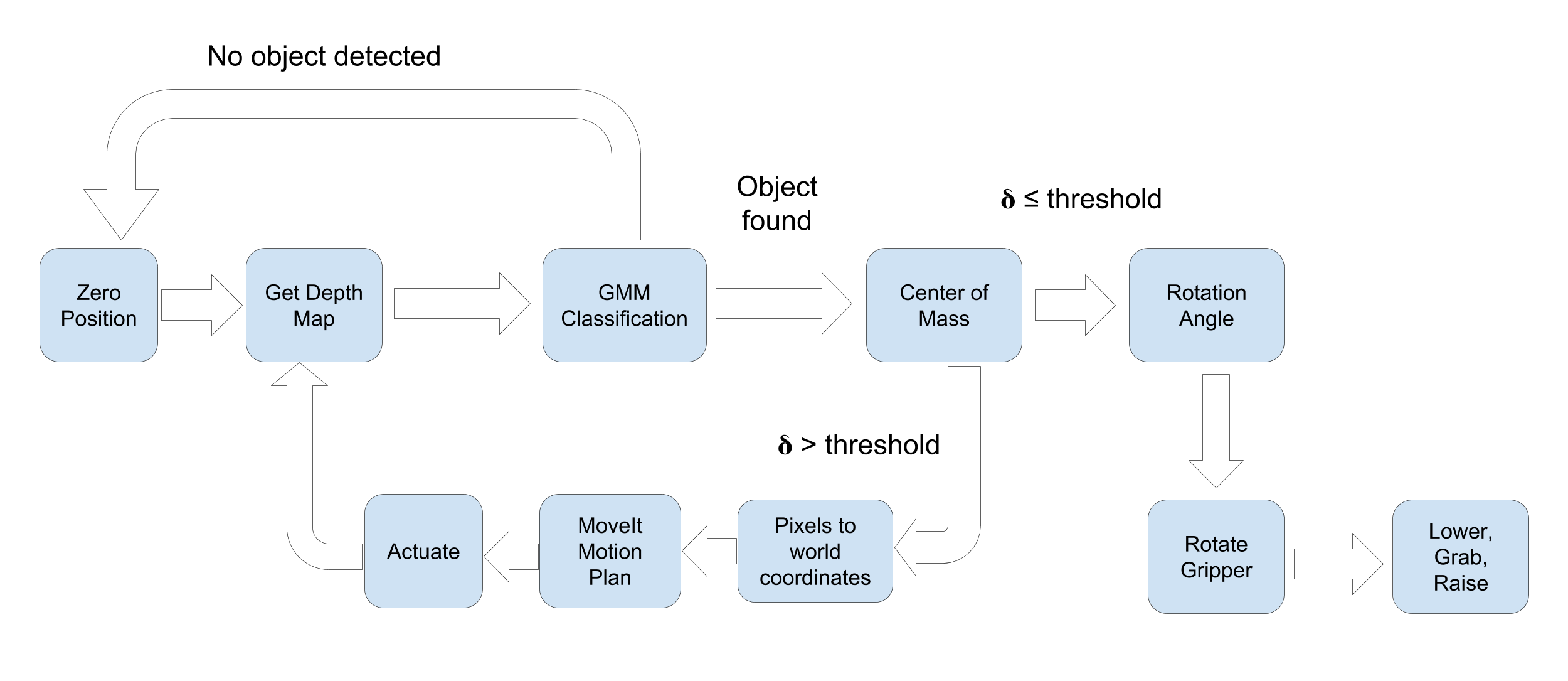

Our software can be split up into two primary components: data processing and actuation. For the data processing, we created a custom image pipeline (described in detail in the implementation section) to find the optimal grip location and gripper angle for each object placed on the table. We then moved the arm to a predefined ‘zero’ location, scanned the table, and dispatched control to the execution portion of our code.

The execution component is primarily our motion controller. This is a relatively simple proportional controller; we found it to be very successful in practice, so we did not want to add excess complexity here. The controller produced a desired location (in world coordinates) based on the output of our data processing, and we then used MoveIt IK to create a motion plan to that location. We constrained motion to prevent the end effector from hitting the table or object, and we found this did not significantly affect the success rate of the motion planner. After we moved, we would scan the table again. If for some reason we did not see the object, we would return to our zero location to reset. If we were suitably close to the ideal grip location, we would rotate the gripper and attempt the grip. Otherwise, control would be given back to the data processing phase.

Once we had decided to grip an object, we would rotate the gripper to the optimal grip angle (given from our data processing), then move the gripper down around 70% of the total z-height in an unconstrained manner. We then constrained the orientation of the gripper to be vertical and descended the remaining amount. We found that splitting up the descent in this way dramatically increased the success rate of that motion plan.

Once we had descended, we closed the gripper and returned to the zero location, hopefully having grasped the object.
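The decision logic and the two-stage descent described above can be sketched as plain functions. This is only an illustrative sketch, not our actual code: the function names, the pixel tolerance `tol_px`, and the idea of reporting the grip target as a pixel offset are assumptions made for the example, and no MoveIt calls are shown.

```python
from typing import List, Optional, Tuple


def next_action(offset_px: Optional[Tuple[float, float]], tol_px: float = 10.0) -> str:
    """Choose the next step of the scan / move / grasp loop.

    offset_px is the (dx, dy) pixel offset to the ideal grip location from
    the latest scan, or None if no object was detected. tol_px is a
    hypothetical tolerance for "suitably close".
    """
    if offset_px is None:
        return "reset"  # object not seen: return to the zero location
    dx, dy = offset_px
    if (dx * dx + dy * dy) ** 0.5 <= tol_px:
        return "grasp"  # close enough: rotate the gripper and descend
    return "move"  # otherwise take another corrective step and rescan


def descent_waypoints(
    z_start: float, z_grasp: float, free_fraction: float = 0.7
) -> List[Tuple[float, bool]]:
    """Split the descent into an unconstrained leg and a constrained leg.

    Returns (target z, orientation constrained?) pairs. The first leg covers
    free_fraction of the height; the second keeps the gripper vertical.
    """
    z_mid = z_start - free_fraction * (z_start - z_grasp)
    return [(z_mid, False), (z_grasp, True)]
```

For example, `next_action(None)` returns `"reset"`, and `descent_waypoints(1.0, 0.0)` places the intermediate waypoint at roughly 30% of the starting height.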

Decisions

Our most important decision came early in the project, and it was also the one with the most tradeoffs. We struggled to onboard with the Reflex Takktile Gripper, and had to decide if we wanted to keep pushing and try to work with it, or if we wanted to switch to the electric parallel gripper. When considering this decision with our design criteria, we were particularly concerned about the loss of degrees of freedom in the gripper if we switched to the parallel gripper and how that would impact the set of objects we could grasp.

We ended up choosing the parallel gripper, but put extra effort into designing our image processing algorithm and controller in order to still grasp a wide range of objects. As you can see in our results section, this decision proved in hindsight to be a good one: we were still able to pick up spheres, hats, and other complicated objects, which was exactly where we thought the parallel gripper would struggle. The decision was also favorable on the cost front, since the Takktile gripper costs many thousands of dollars, much more than the parallel gripper.

There were several decisions to be made with the image pipeline as well. One of our goals was to avoid using AR Tags and instead detect and localize objects using raw sensor data. This led us to decide between using a camera or the Kinect. When making this decision, we also had to factor in the prospect of detecting any possible object. For this reason, we opted for the Kinect, as its sensor data followed a bimodal distribution that lent itself well to detecting, segmenting, and localizing any reasonable object of interest.

One of our larger decisions was which assumptions to make about the objects. Our goal was to maximize the number of objects we could pick up using a parallel gripper, while also maintaining a feasible scope. Thus, we made two assumptions. The first is that the objects of interest have a uniform density. This allows us to estimate the center of mass. Additionally, when deciding the angle to grasp at, we assume objects are simply connected, meaning our grasps will always grip from two outer points of the object. The implications of these assumptions are detailed in the Image Pipeline section; however, we found that these assumptions did not limit our algorithm's ability to grasp reasonable objects. As an additional note, our algorithm requires optimizing non-convex, and even non-continuous, functions. We tried simulated annealing and grid search, but due to both deprecations in simulated annealing libraries and the effectiveness of grid search, we opted for the latter.

From the outset, we envisioned scanning the table once, moving to the optimal grip location, and gripping. As we began work on the project, we realized this was infeasible and we were not able to accurately move to the grip target in one motion. Our next approach was to use a proportional controller, which would refine the arm’s position iteratively at the cost of scanning the table multiple times per grasp, a somewhat slow process. We found this approach to be very successful, particularly in how it permitted us to move the objects after the arm scanned the table (a form of robustness). After we got this working, we carefully monitored execution to watch for behavior that would tell us we needed to add D or I correction to the controller (for instance, oscillatory behavior). We did not see any of this behavior, but this is an area where we could increase robustness and durability in the future.

We also had to decide how to get data from our Kinect. We had to choose between creating a new end effector interface on Sawyer and mounting the Kinect on a tripod. We quickly settled on the former for the following reasons:

- The tripod approach is very sensitive and prone to setup details and tuning

- One of our goals with this project was to be robust to variations in the environment, specifically with different shaped objects positioned in different parts of the grasping region. If we relied on manually setting up the Kinect on a tripod somewhere in the room before each attempt, not only would we spend a great deal of time making sure it was in exactly the correct position (or tuning programmatic constants), we would also risk us or others in the lab bumping the tripod and forcing us to recalibrate frequently.

- The angle of scans produced from a Kinect-mounted tripod is suboptimal

- When we were determining where to move the arm to grasp an object and also how to grasp it, we relied heavily on the top-down nature of our scans. They enabled us to see the object from the same perspective our gripper would be seeing it from, and also allowed us to change the angle from which we saw the object as we adjusted the robot arm. This feedback proved especially vital to our final approach, specifically in that it allowed us to use a closed loop controller for arm movement, which dramatically improved our results over earlier attempts.

- Furthermore, we would not be able to distinguish gripping patterns for wide classes of objects if we used the tripod approach. In the case of the rectangular box, having the overhead view allowed us to find the short axis of the box, which was the only direction we were able to grip it in. More complicated objects like the hat, with its irregular structure, would have been impossible to grasp without an overhead view.

- With an eye to future work, when the object's top becomes highly textured, such as in the case of a Lego block, it would be useful to incorporate different Kinect scan angles to improve the resolution and grasp quality of our approach. However, putting the Kinect on the tripod fixes the data angle, and would prevent us from pursuing such a modification in the future.

Analysis

Robustness:

When analyzing our design in the context of robustness, the hardware (with a slight modification to use screws) is quite robust. It sits out of the way for the most part, is on the end effector, which means the robot can reposition the sensor during execution, and the Kinect is mounted in such a way as to get an optimal view of the object to be grasped.

The use of the Kinect itself is also quite robust, in that the sensor records accurate depth readings, compared to something like a single or dual camera, where the former requires bleeding-edge research to perform successful grasping and the latter is very prone to calibration issues and fine tuning.

One benefit of using a Kinect is that the depth values in the depth map follow a mixture of two Gaussians (one for the object, one for the background). This allows the same Gaussian Mixture Model algorithm to detect any object that may lie on the table, regardless of shape or size. Additionally, an object can be mostly out of frame, but as long as a part of the object is sensed, the location of the object can be determined and the arm can be repositioned to get a better view of the object.

Additionally, we designed an optimization problem to determine at which angle to grasp. This optimization problem doesn't require anything other than a segmentation of the object. Because of this generality, we were able to find reasonable angles to grasp nearly any object that followed our assumptions.

Using a Kinect had a few drawbacks. Any objects that were too close could not be detected, and additionally the field of view is rather limited. If our detection algorithm could not find any object with reasonable certainty, we moved our arm back to a 'zero' position we determined beforehand, allowing it to rescan the surface from the center of the table. This let the algorithm correct for any mistakes it made without creating unpredictable behavior.

One particular way in which we could increase robustness is to better lock the Kinect side to side within its clips. This would be easily achieved by adding a feature to the clips that would capture the stand the Kinect is intended to sit on. We considered this during our project’s design but decided against it for time reasons.

Choosing a better sensor would increase robustness, but was not an option for us given the resources available. The Kinect sensor was optimized for tracking people in a living room setting, which meant that we had to hold the Kinect relatively far away from the table to get data, which somewhat restricted the movement of our arm. A depth sensor optimized for shorter ranges would have helped here.

Durability:

From a durability perspective, our design is relatively durable in that there aren’t many moving parts outside of Sawyer. We could increase the infill in the 3D-printed components, or perhaps switch to metal there to really increase the physical durability, but this would only be needed in a much more hostile environment. We could also better constrain our arm’s motion and better detect and recover from errors during execution, perhaps by alerting users about a problematic environment, but overall our design is relatively durable. One key way in which we would like to increase our durability is to create a custom end effector model that would incorporate our Kinect mount. We found that occasionally our motion plans would have the Kinect sensor interfere with the Sawyer arm, so by adding a new end effector model, we could use Sawyer’s prewritten code to avoid self-interference.

Cost:

A final key component in a real-world design analysis is cost. Outside of the Sawyer, our approach is extremely cost efficient, especially if a cheaper depth sensor were used. The total cost of our 3D-printed parts was about $7, and if made in bulk they would be even cheaper. Other than the Kinect sensor, no other part of our approach cost anything at all.

Implementation

Hardware

There are two primary components to our design: the blue cuff in the center of the image, and the two black clips which retain the Kinect sensor itself. These are bolted onto the blue cuff. All plastic parts were 3D printed; we used both the Cory Supernode and the Moffit Makerspace.

We chose to design a cuff that sat around the metal wrist of Sawyer for a couple of reasons. Firstly, we wanted to make installation and removal of our interface quick and easy, so avoiding more screws and bolts was appealing. Secondly, because the wire connecting the parallel gripper to Sawyer is very short, it would be challenging to slip an interface plate between the gripper and the arm, to say nothing of having to get new screws long enough to pass through our interface and still secure the gripper. However, because we were no longer using screws, we faced the issue of keeping our interface in the same position for each round. We solved this using two techniques.

To try to constrain our interface rotationally, we started by simply designing the part to have close to a friction fit on the arm, which then let us add tape to the inside of the part, producing a good friction fit. Note the curved plastic arcs coming off the blue piece in the image; these were used to start the interface in the same position each time we installed the gripper. We would position the blue plastic piece in such a way around the cuff that one of the arcs would be in contact with the electric parallel gripper. Originally our hope was that these arcs would snugly fit around the curve in the gripper, but that proved too challenging to design, and the friction-fit approach worked well enough that we continued with it.

The black Kinect clips are designed to fit tightly around the Kinect, and are a few millimeters too thin by design so that the flexing of the plastic keeps the Kinect in position. Experimentally, they kept the Kinect from slipping even when held such that the Kinect opening was parallel to the floor.

As a future extension, it would be good to change this part to one that is screwed onto the robot arm itself to increase the repeatability of our approach. We made that tradeoff primarily for time reasons for this project, but a rigidly attached end effector would increase reliability, and its disadvantages would be mitigated by not having to install and uninstall it frequently.

Image Pipeline

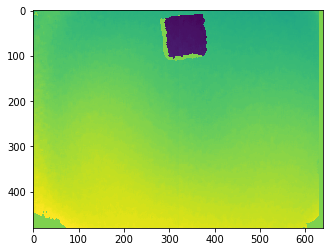

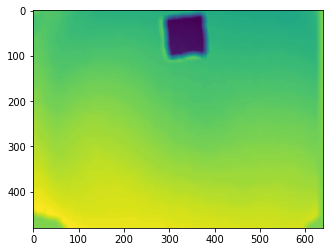

(1): Depth map of a box on a table. Solid green areas correspond to errored values.

Kinect Depth Map and Error Correction:

At the start of the loop, we collect a depth map of the area of interest from the Kinect using the Freenect library. The sensor data from the Kinect is less than perfect. In addition to noise, the Kinect sets the value of any pixel whose depth it cannot accurately detect to 2048. The values that we care about are generally in the range of 350 to 700. We ignore any values that are clipped to 2048 for classification purposes, and treat these pixels as part of the background.
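As a rough illustration of this error-correction step, the sketch below filters out the clipped readings before any model fitting. It assumes the depth map has already been read into a NumPy array; the function names are hypothetical, and only the sentinel value 2048 comes from the description above.

```python
import numpy as np

# Sentinel the Kinect reports for pixels whose depth it could not measure
# (value taken from the description above).
INVALID_DEPTH = 2048


def valid_depth_mask(depth):
    """Boolean mask that is True where the raw depth reading is usable."""
    return np.asarray(depth) != INVALID_DEPTH


def depth_samples(depth):
    """1-D float array of usable depth values, e.g. as input to a mixture fit."""
    depth = np.asarray(depth, dtype=float)
    return depth[valid_depth_mask(depth)]
```

Pixels excluded here are not fed to the mixture model; at classification time they are simply treated as background.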

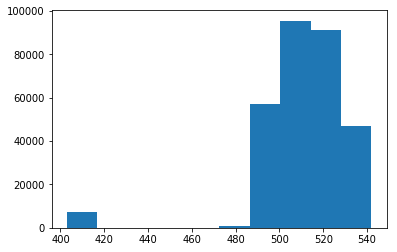

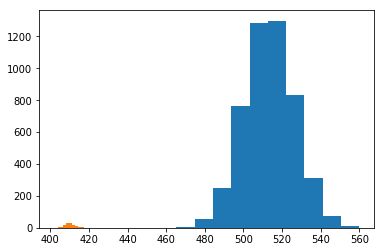

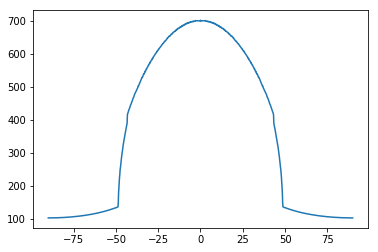

(1): The histogram of depth values observed.

(2): Histogram of 2000 samples from the estimated mixture.

Gaussian Mixture Model:

The depth values within the image typically follow a bimodal distribution: a smaller mode for the depth of the object and a larger mode for the depth of the background. Each mode is typically roughly Gaussian, so we can classify each pixel using a Gaussian Mixture Model to create an image segmentation of object versus background.

We assume the depth values in the image are i.i.d. and follow a mixture of two Gaussians, where one Gaussian corresponds to the object and the other to the background. Let \(v_{ij}\) be the observed value at \((i,j)\). We then maximize the likelihood over possible mixtures of two Gaussians:

$$\max \limits_{\phi_o, \phi_b, \mu_o, \mu_b, \sigma_o, \sigma_b} \prod_{i,j} p(v_{ij})$$

where

$$p = \phi_o \mathcal{N}(\mu_o, \sigma_o) + \phi_b \mathcal{N}(\mu_b, \sigma_b)$$

$$\phi_o + \phi_b = 1, \; \phi_o \geq 0 , \; \phi_b \geq 0$$

This problem can be solved within very few iterations of Expectation Maximization as implemented in scikit-learn (we found 10 iterations to be consistent). Also, while our assumption that the depth values are i.i.d. worked in practice, it is a strong assumption, and future work may explore using conditional probabilities or connectivity for classification.
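A minimal sketch of this fit using scikit-learn's `GaussianMixture` is shown below. It is an illustration rather than our exact code: the function name, the fixed random seed, and the convention of returning the nearer component first are assumptions made for the example.

```python
import numpy as np
from sklearn.mixture import GaussianMixture


def fit_depth_mixture(samples, max_iter=10, seed=0):
    """Fit a two-component 1-D Gaussian mixture to depth samples with EM.

    Returns (phi, mu, sigma) arrays ordered so that index 0 is the component
    with the smaller mean (the object) and index 1 the larger (background).
    """
    x = np.asarray(samples, dtype=float).reshape(-1, 1)
    gmm = GaussianMixture(n_components=2, max_iter=max_iter, random_state=seed)
    gmm.fit(x)
    order = np.argsort(gmm.means_.ravel())
    phi = gmm.weights_[order]
    mu = gmm.means_.ravel()[order]
    # Default covariance_type is "full", so covariances_ has shape (2, 1, 1).
    sigma = np.sqrt(gmm.covariances_.reshape(-1)[order])
    return phi, mu, sigma
```

Sorting the components by mean is one way to apply the convention that the object is closer to the sensor than the table, so its depth values are smaller.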

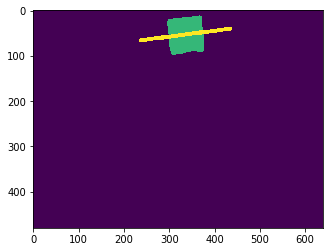

(1): Low pass of the depth map.

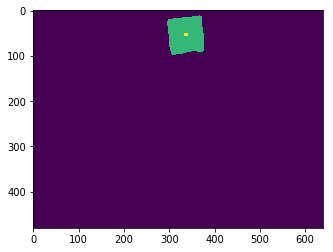

(2): Resulting segmentation (green is object, purple is background) and center of mass (yellow).

Segmentation and Center of Mass:

Once we converge to a viable mixture, we can use it for classification. First, we low pass the image to remove high frequency noise and soften the edges. We found this led to cleaner segmentations than classifying on the original image. To low pass the image, we used a Gaussian Blur from OpenCV. We then use our converged mixture model to classify each pixel independently.

Let \(\phi_o^*, \phi_b^*, \mu_o^*, \mu_b^*, \sigma_o^*, \sigma_b^*\) be the optimal parameters of the mixture optimization problem. Our object's depth values should be smaller than the depth values of the background, so without loss of generality let \(\mu_o^* \leq \mu_b^*\). Defining \(p_o = \mathcal{N}(\mu_o^*, \sigma_o^*)\) and \(p_b = \mathcal{N}(\mu_b^*, \sigma_b^*)\), we classify a pixel value \(v_{ij}\) using the function

$$f(v_{ij}) = \begin{cases} 1 & \text{if } \phi_o^* p_o(v_{ij}) \geq \phi_b^* p_b(v_{ij}) \\ 0 & \text{if } \phi_o^* p_o(v_{ij}) < \phi_b^* p_b(v_{ij})\end{cases}$$

To find the center of mass of the object, we first assume the object has uniform density. Treating the normalized segmentation as a joint probability distribution over the indices, the center of mass is then the expected index:

$$\begin{bmatrix} c_x \\ c_y \end{bmatrix} = \frac{1}{\sum_{i,j} f(v_{ij})}\sum_{i,j} f(v_{ij}) \begin{bmatrix} i \\ j \end{bmatrix}$$

If the object is predicted to be strangely large or small, the observation is ignored and no action is performed. Otherwise, this center of mass is passed to the controller to move the end effector of the Sawyer (more details below).
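The classification rule and the center of mass can be sketched with NumPy alone. This is an illustration under assumptions: the Gaussian blur step is omitted, the densities are compared in log space (equivalent to the rule above, but it avoids both densities underflowing to zero for far-off values such as 2048), and the size thresholds in `plausible_size` are hypothetical placeholders.

```python
import numpy as np


def log_weighted_density(v, phi, mu, sigma):
    """log(phi * N(v; mu, sigma)), dropping the constant shared by both classes."""
    return np.log(phi) - np.log(sigma) - 0.5 * ((v - mu) / sigma) ** 2


def segment(depth, phi, mu, sigma):
    """f(v_ij): 1 where the weighted object density is at least the background's.

    phi, mu, sigma each hold (object, background) parameters in that order.
    """
    v = np.asarray(depth, dtype=float)
    obj = log_weighted_density(v, phi[0], mu[0], sigma[0])
    bg = log_weighted_density(v, phi[1], mu[1], sigma[1])
    return (obj >= bg).astype(np.uint8)


def center_of_mass(mask):
    """Expected (i, j) index under the normalized segmentation, or None if empty."""
    idx = np.argwhere(mask > 0)
    if idx.size == 0:
        return None
    return idx.mean(axis=0)


def plausible_size(mask, min_frac=0.01, max_frac=0.5):
    """Reject segmentations covering an implausible fraction of the image.

    min_frac and max_frac are hypothetical thresholds, not tuned values.
    """
    frac = float(np.mean(mask > 0))
    return min_frac <= frac <= max_frac
```

In use, a scan would be acted on only when `plausible_size(mask)` is true, with `center_of_mass(mask)` then passed on as the grip target in pixel coordinates.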

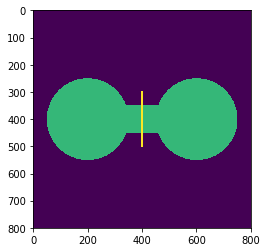

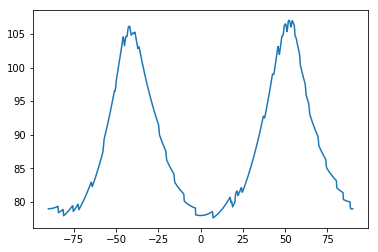

(1): Synthetic dumbbell (green) with the angle our algorithm would grasp at.

(2): Plot of the objective function w.r.t. theta. Optimum is achieved at \(\theta = \pm \frac{\pi}{2}\).

(3): Real box (green) with grasp angle (yellow).

(4): Plot of the objective function w.r.t. theta. Optimum is achieved at \(\theta \approx \frac{\pi}{25}\).

Grasp Angle:

Because our gripper was the parallel gripper, adapting to specific objects mainly required determining at which angle to grasp the object.

First we continue to assume the object has uniform density. We make an additional assumption that the object is simply connected. We then center the grasp above the detected center of mass, as this would balance the mass on either side of the gripper.

Before lowering the end effector, we determine an angle to rotate the gripper by. We approached this problem using a heuristic and approximating a corresponding optimization problem. When picking up objects of uniform density, a natural approach is to grab the object around the plane passing through the center of mass that minimizes the cross-sectional area. For example, a human picks up a dumbbell around the small cylinder connecting the two large pieces, as opposed to across the ends of the two large pieces. We now formalize a way to solve for this heuristic.

First, we define the indicator function \(f: \mathbb{R}^2 \rightarrow \{0, 1\}\) that is centered at the center of mass (the center of mass corresponds to \((0,0)\)), where \(f(x,y) = 1\) if the object occupies \((x,y)\) and \(f(x,y)=0\) otherwise.

A line passing through the origin at angle \(\theta\) is then defined as

$$\mathcal{L}(\theta) = \left \{ (t \cos \theta , t \sin \theta) \; | \; t \in \mathbb{R} \right \}$$

Our goal is to find a line passing through the origin that minimizes the intersection of the line and the object. Thus, our optimization problem is

$$\theta^* = \operatorname*{arg\,min}_{\theta \in \left [-\frac{\pi}{2}, \frac{\pi}{2} \right]} \int_{-\infty}^{\infty} f(t \cos \theta, t \sin \theta) \, \text{d}t$$

The objective function is \(\pi\)-periodic, allowing us to restrict the domain. In general, the objective function is not convex in \(\theta\). However, for reasonable applications, grid search is effective at finding a close-to-optimal solution. Additionally, if the object is a convex set, the objective function is Lipschitz-continuous in \(\theta\), allowing for a theoretical guarantee of obtaining the optimal solution using the bisection method. Using SciPy's integration capabilities, this integral can be computed rather quickly.
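The grid search can be sketched as follows. This is a simplified illustration rather than our implementation: instead of SciPy integration, it approximates the integral by sampling the binary segmentation at evenly spaced points along the line, and the axis convention (x along columns, y along rows), the step size, and the number of candidate angles are all assumptions made for the example.

```python
import numpy as np


def chord_length(mask, center, theta, step=0.5):
    """Approximate the integral of f along the line through center at angle theta.

    mask is a binary image indexed [row, col]; center is (row, col).
    x runs along columns and y along rows (an assumed convention).
    """
    rows, cols = mask.shape
    reach = float(np.hypot(rows, cols))  # long enough to cross the whole image
    t = np.arange(-reach, reach + step, step)
    c = np.rint(center[1] + t * np.cos(theta)).astype(int)
    r = np.rint(center[0] + t * np.sin(theta)).astype(int)
    inside = (r >= 0) & (r < rows) & (c >= 0) & (c < cols)
    return step * float(mask[r[inside], c[inside]].sum())


def best_grasp_angle(mask, center, n_angles=180):
    """Grid search over [-pi/2, pi/2) for the angle with the shortest chord."""
    thetas = np.linspace(-np.pi / 2, np.pi / 2, n_angles, endpoint=False)
    lengths = np.array([chord_length(mask, center, th) for th in thetas])
    k = int(np.argmin(lengths))
    return thetas[k], lengths[k]
```

For a wide, flat rectangle centered in the image, the shortest chord found this way runs across the short axis, which matches the dumbbell intuition above.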

After having found an angle to grasp at, the end effector is rotated by that angle and then lowered to the object. For objects that aren't simply connected (such as a donut), this algorithm might have a harder time, as it would attempt to grasp around the entire object as opposed to using the holes in the object to its advantage. This is an area we hope to explore more in the future.

Controller

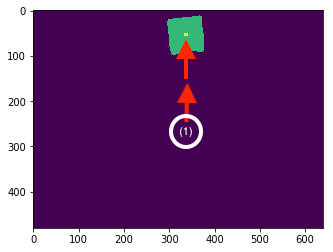

(1): the initial scan position (approximate)

The principal challenge our controller had to solve was that of converting the dx, dy in the image (measured in pixels) to a dx, dy in the real world (measured in meters). We noticed that the images produced by freenect (our open Kinect interface) followed this rule:

The value at pixel (i,j) corresponds to the distance, in millimeters, of that point in the real world from the sensor.

We interpreted this rule to mean that the images were in some way processed to have a useful structure, and then tuned our controller constant to take as input the dx, dy (pixels) and output dx, dy (meters), which we found to work well experimentally.

If this controller had not worked, we considered decaying our controller constant harmonically, but this presented the tradeoff that we would not be able to move the object on the workspace after execution began, because the constant would have already decayed. We also considered using a PID controller, but found that the added complexity was not necessary here.
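To illustrate why a single tuned constant can be enough, the toy simulation below applies a proportional step repeatedly. Every number in it is hypothetical: the gain, the "true" meters-per-pixel scale, and the target are made up for the example, and the scan is replaced by a direct computation of the pixel error.

```python
def proportional_step(offset_px, gain_m_per_px):
    """Map a (dx, dy) pixel offset to a commanded (dx, dy) displacement in meters."""
    dx, dy = offset_px
    return gain_m_per_px * dx, gain_m_per_px * dy


def simulate(target_m, true_m_per_px=0.002, gain_m_per_px=0.0015, steps=8):
    """Toy scan / step loop; returns the remaining error in meters after each step.

    true_m_per_px stands in for the unknown real pixel scale, and
    gain_m_per_px is the tuned controller constant (both hypothetical).
    """
    pos = [0.0, 0.0]
    errors = []
    for _ in range(steps):
        err_x = target_m[0] - pos[0]
        err_y = target_m[1] - pos[1]
        # What a scan would report: the same error expressed in pixels.
        offset_px = (err_x / true_m_per_px, err_y / true_m_per_px)
        step_x, step_y = proportional_step(offset_px, gain_m_per_px)
        pos[0] += step_x
        pos[1] += step_y
        errors.append(((target_m[0] - pos[0]) ** 2 + (target_m[1] - pos[1]) ** 2) ** 0.5)
    return errors
```

In this toy model each step removes a fixed fraction of the remaining error (here 75%), so the arm converges even though the gain does not match the true scale. Because the gain never decays, the loop also keeps tracking if the target moves between scans.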

System Diagram

Results

Final Product

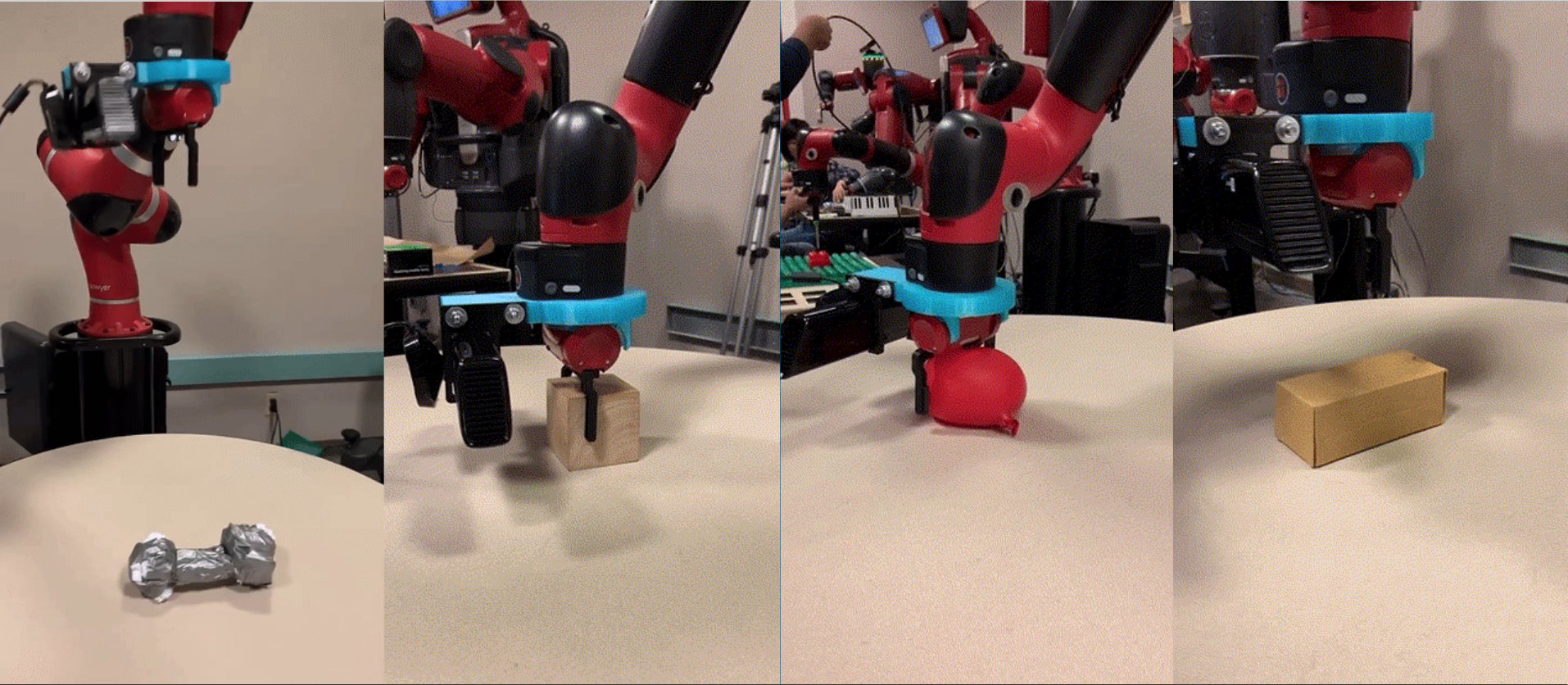

Putting everything together, our system performs robustly in grasping and lifting a variety of objects. Testing on objects of different shapes in different positions, we found that our system was able to reliably locate the object, actuate to the correct position, rotate to the correct orientation, and grasp the object over several iterations. In practice, we saw that regardless of the amount of synthetic (sometimes adversarial) movement or rotation of the object, our system was able to use the designed controller to find and grasp the object. To test the robustness of our system along each axis of our goal, we present scenarios below, each of which stresses a different category of difficulty, and describe what the system must do correctly to achieve a proper grasp.

Detail: Sphere Grasping

The first example we look at is a spherical object, in this case a tennis ball. If we break our actuation system down into two aspects related to grasping the object, translation and rotation, we can see that for a spherical object, translational correctness is far more important than rotational correctness. If our movement is translationally imprecise, the parallel gripper will attempt to grip off the center of the sphere and will slip off the object, but any rotation that our system decides to make will have no effect (as the object is rotationally symmetric). In the video to the left, we can see that our system is able to grasp the sphere with precise translational movement.

Detail: Rectangular Grasping

The second example we look at is a rectangular prism, a cardboard box. Breaking our actuation system down into translation and rotation as in the previous example, we see that for this object the system has some slack in translational correctness but must be rotationally precise. Any grasp position along the long side of the box works, but the gripper fingers must lie directly on opposite long sides of the box. Any other rotation results in either a complete inability to lift the object or slipping along the sides, which may also lead to a failure unless the object is coerced into a position in which the parallel gripper is able to grab it (which is still not ideal). In the video to the left, we see that our system is able to grab the box with precise rotational movement.

Detail: Non-uniform Grasping

The third example we look at is an object that is not uniform in shape and has a surface that may be difficult for our system to sense, a hat. Our sensing system relies on a Gaussian Mixture Model (GMM) to distinguish the object on the table from the table itself. The hat we use is composed of more than one surface, so the GMM may have trouble distinguishing the different height levels. However, by thresholding the histogram data we collect, we are able to reliably identify the surface of our object and use it for our rotation and movement calculations. In the video to the left, we see that our system is able to grab the hat, but does have slight trouble locking onto the object, as its shape is difficult to interpret given the Kinect data and our process for separating the object from the table.

Detail: Non-Convex Grasping

Finally, the last example we look at is an object which has a non-convex shape when viewed from the perspective of the Kinect, a dumbbell. This object requires both translational and rotational accuracy, as it must be grasped within a certain range translationally and within a certain range rotationally. In the video to the left, we see our system is able to grab the dumbbell, showing robustness in both rotational and translational actuation, as well as robustness in sensing, as the shape is outside the usual class of objects.

Conclusion

In terms of the final product we were able to deliver, our solution was able to meet the design criteria we first posed, and was able to go above and beyond in terms of what we set out to accomplish. In particular, one of our main goals of this project was to create a system that was able to grasp not only just a few classes of objects, but a system that was able to attempt and successfully grasp general objects in a variety of orientations and sizes given a few, reasonable constraints.

Our initial design of using the Kinect mounted onto the wrist of the Sawyer also proved to be a good design choice as our image processing pipeline was able to take advantage of the depth data and produce results that were able to reliably provide location and rotation data to our actuation system.

There were many difficulties we encountered throughout the process, but two of the major ones were not being able to use the Reflex Takktile Gripper and sensing objects of nonhomogeneous height. Early on, we had many problems with the Reflex Takktile Gripper in terms of API usage and connectivity. Changing to the parallel gripper was a huge design decision, as we lost much of the data that would have come with the Reflex Takktile Gripper, but gained an API that was simpler to use and understand. A future goal of this project would definitely be to include the Reflex Takktile Gripper and the data that comes with it. The other problem, sensing objects of nonhomogeneous height, was one that we saw with objects like the Lego block. Because of the way our sensing system is designed, objects with two or three clearly distinct levels of height were difficult to work with. Another future goal would be to redesign the sensing system so that it is robust to these types of objects.

Our final product contains only a few slight "hacks", depending on the perspective. One is that we use a hardcoded threshold value in our sensing pipeline to find the difference between the object and the table. To address this, we can change the sensing system so that it uses a different metric for determining the difference between the object and the table. The other "hack" is that we use a simple box constraint, with the height of the Kinect, to prevent the Kinect from colliding with the table by constraining the motion plans returned from MoveIt IK. To address this, we can manually change the URDF of the Sawyer to include the dimensions of the Kinect as part of the end effector itself and then remove the box constraint, as MoveIt would then discard the motion plans that cause the robot to hit itself (since the Kinect would effectively be a part of the Sawyer model).

About Us

Hank

About

I'm a third year EECS major who's interested in distributed systems, machine learning, and image processing. I was born in Seattle, and recently have picked up an interest in hiking during my free time. Over the summer, I'm also a big fan of swimming. I'm a bit of a foodie (but it has to be vegetarian or seafood, I'm a pescatarian), and a fun fact about me is that I own a Sous Vide machine, though I haven't gotten much of a chance to use it during the semester.

Contributions

I measured, designed, and 3D printed the parts for the Kinect interface. This website borrows heavily from the styling and general formatting from my personal website, which we used for this project because we weren't huge fans of the default templates. I also wrote the code we used to interface with MoveIt, and contributed to a variety of small miscellaneous things as they came up.

Arsh

About

I'm a third year EECS major who's interested in Mathematics, Machine Learning, and Signals. I was born in New York City but grew up in Burbank, California for most of my childhood. My passions include sketching, painting, gymming, and writing music. I also really love reading about math!

Contributions

I mostly worked on designing, formalizing, and implementing our image pipeline to localize objects and determine grasping angles. I also contributed to the design choices for constraints versus generalizability and assisted with integrating the image pipeline into the controller.

Arjun

About

I'm a third year Computer Science major who's interested in signals processing, computational neuroscience, and machine learning. I was born in a small town called Hillsborough in New Jersey and I'm a really big fan of the west coast. I really enjoy taking photos and going on hikes through all the beautiful parks there are in California (we don't have this much beauty back on the east coast). I also draw and play tennis in my free time.

Contributions

I worked mainly on helping design and implement the controller and various design decisions regarding the movement of the robot in certain situations, and I wrote code to interface with MoveIt and for the different constraints on the arm during the various stages of execution. I also helped to fine-tune parameters such as that for the static transform and the ones for conversion between the pixel to analog domain.

Additional Content

CAD Models: (all .step)

(downloads may not work on Chrome)

Our Code:

Other Videos

Miscellaneous

- Our presentation

- We used freenect as our Kinect API, you can find it here